The Holy Grail of Deep Learning: Modelling Invariances

One of the most heated dogmatic debates in machine learning is this: how much detailed prior knowledge do you bake into your machine learning models? On the one side, you have the extremist Bayesians who would advocate building detailed generative models with carefully selected priors. On the other, you find the extremist Deep Learners, who argue that strong ‘priors’ only serve to limit the flexibility of their models.

Broadly speaking, a prior refers to anything that the model ‘knows’ before it is shown any data. It can come in many forms, for example probability distributions or network structures. The model's predictions and its ability to generalise patterns are a function of these prior assumptions and the data from which it learns.

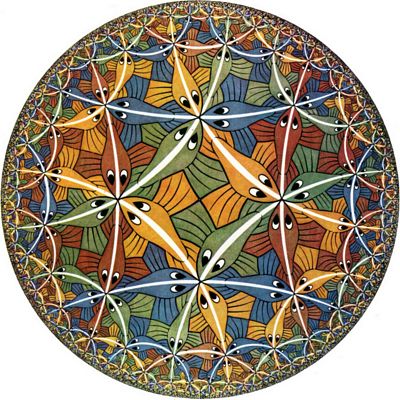

In visual object recognition, an important class of priors is invariances in the visual space: objects can be rotated, translated, photographed using lenses with different angles of view, or shown under different illumination or against different backgrounds, all without changing the class/identity of the object. The goal of a machine learning system is to effectively ignore these irrelevant aspects while still being sensitive to changes in the relevant aspects. You can choose to design a model where these invariances are baked in, whose predictions are de facto invariant to rotations, translations, etc. Alternatively, you can decide to use a general model that is flexible enough to discover all these invariances for itself from the data it is fed, without being explicitly engineered or restricted to do so.

The two extremes: Explicit Priors vs. End-to-End Deep Learning

Different communities have very different viewpoints on how important priors are in machine learning.

Bayesians are probably the main advocates of the smart use of priors. On this end of the spectrum, an extremist Bayesian would build very detailed generative models of data, describing how they believe the world works. They would specify hidden explanatory variables whose meaning and interpretation are known before any data is seen. They essentially engineer most aspects of the model, and leave just enough flexibility to capture the parameters they are uncertain about or expect to vary. I have been guilty of doing work at this end of the parametric modelling spectrum, such as this paper on Mind Reading by Machine Learning. The thing is, if you have little data and pretty good ideas about how things work, this approach really does work, and can give you good explanatory insights into the processes behind the data. But you'll run into problems modelling complex, open-ended data like images, as you can't possibly hope to describe all the underlying factors and processes that explain the data. Typically these models also can’t make use of very large datasets.

On the other end of the spectrum, the extremist deep learner would argue that any form of "feature engineering" only restricts the flexibility of their models. They would instead just increase flexibility (more neurons/units) and add more data, and let the network figure everything out by itself with as little engineering as possible. "[The] tedious work of doing more clever engineering can go a long way in solving particular problems, but this is ultimately unsatisfying as a machine learning solution" - argue the authors of a deep learning paper I read recently. (Sorry, I know this sentence was not meant to be taken out of context, and I don't want to imply the authors share an extremist view; I use it only to illustrate the point.) This approach is responsible for recent breakthroughs in speech-to-text, computer vision, and NLP.

What I described here are of course the extremities of the spectrum, and probably - hopefully - they don't exist in their purest form.

There are many examples of Bayesian methods that are nearer the centre of the spectrum. For instance, Gaussian processes and nonparametric Bayes are examples of priors that are in fact very weak and flexible - and are therefore somewhere in between. The same is true of deep learning. You may use convolutional nets with max-pooling, which you can think of as building (approximate) translation invariance into the network: the same filter is applied at every position, and pooling discards where exactly it fired. The question is, for a given application, where between these two extremes is the right place to be?
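To make the max-pooling point concrete, here is a minimal NumPy sketch, not taken from any particular network. It assumes a toy 1-D "image", a single hand-picked filter, and global max-pooling over the whole feature map; the names `conv1d_valid` and `global_max_pool` are made up for this illustration. Real convnets pool over small local windows, so they are only approximately invariant, and only to small shifts.

```python
import numpy as np

def conv1d_valid(signal, kernel):
    """Slide one shared kernel over the signal (cross-correlation, no padding)."""
    return np.correlate(signal, kernel, mode="valid")

def global_max_pool(feature_map):
    """Keep only the strongest response, discarding where it occurred."""
    return feature_map.max()

# Toy input: a small bump on an otherwise empty 1-D "image" (illustrative data).
signal = np.zeros(16)
signal[4:7] = [1.0, 2.0, 1.0]
shifted = np.roll(signal, 5)  # the same bump, moved 5 pixels to the right

kernel = np.array([1.0, 2.0, 1.0])  # a filter that matches the bump

feature_map = conv1d_valid(signal, kernel)
feature_map_shifted = conv1d_valid(shifted, kernel)

# The feature maps differ: the peak moves with the object (equivariance).
print(feature_map.argmax(), feature_map_shifted.argmax())

# After global max-pooling the two outputs are identical (invariance).
print(global_max_pool(feature_map), global_max_pool(feature_map_shifted))
```

The convolution alone only moves its response along with the object; it is the pooling step that throws the position away.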

Recent progress has largely ignored invariances

We are clearly in an AI summer, which has seen unprecedented coverage of and interest in deep learning. In this wave, progress has been largely achieved by increasing the size of models, but not very much has been achieved in terms of modelling priors or invariances.

Convolutional neural nets have been in vogue as an entry-level deep computer vision solution, and as I mentioned earlier you can think of these as a way to implement translation invariance. But in Google's breakthrough cat neuron paper the researchers traded in this prior for more flexibility, saying "unshared weights allow the learning of more invariances other than translational invariances". I read this as "we couldn't figure out how to model all kinds of invariances at the same time, so we'll just throw more computing power and more data at the problem instead and let the network figure it out". Thus, some academics criticise the recent wave of deep learning for being unimaginative. Deep learning guys, so their argument goes, use models from the '60s or '80s, scale them up to billions of parameters and give them loads more data.
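The trade-off between shared and unshared weights can be sketched on a toy 1-D example. This is only an illustration under my own assumptions (random filters, a 16-pixel input, made-up function names `shared_layer` and `unshared_layer`), not the architecture from the paper. With one shared filter, shifting the input simply shifts the output; with a separate filter per position, that guarantee disappears and the parameter count grows with the input size.

```python
import numpy as np

def shared_layer(x, kernel):
    """Convolution-style layer: the same kernel is applied at every position."""
    k = len(kernel)
    return np.array([x[i:i + k] @ kernel for i in range(len(x) - k + 1)])

def unshared_layer(x, kernels):
    """Locally connected layer: each position has its own kernel (one per row)."""
    k = kernels.shape[1]
    return np.array([x[i:i + k] @ kernels[i] for i in range(kernels.shape[0])])

rng = np.random.default_rng(0)
n, k = 16, 3
positions = n - k + 1

kernel = rng.normal(size=k)                 # shared: k parameters
kernels = rng.normal(size=(positions, k))   # unshared: positions * k parameters

x = np.zeros(n)
x[4:7] = [1.0, 2.0, 1.0]
x_shifted = np.roll(x, 5)  # same pattern, 5 pixels to the right

# Shared weights: the response to the shifted input is the shifted response.
shared_equivariant = np.allclose(
    np.roll(shared_layer(x, kernel), 5), shared_layer(x_shifted, kernel)
)

# Unshared weights: nothing ties the responses at different positions together.
unshared_equivariant = np.allclose(
    np.roll(unshared_layer(x, kernels), 5), unshared_layer(x_shifted, kernels)
)

print(shared_equivariant, unshared_equivariant)
print(kernel.size, kernels.size)  # parameter counts: shared vs unshared
```

The unshared layer is free to learn other invariances, but it has to rediscover from data what the shared layer gets for free.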

Modelling invariances is key to progress going forward

In the world of end-to-end deep learning, where no priors are baked in, progress is limited by the growth of computational power and growth of data. While both of those grow pretty quickly, I would argue that progress still is not as fast as it could be. Plus, as both data and computing power are increasingly centralised, innovation is limited to companies or people who have access to plenty of both: pretty much Google and Facebook.

By modelling invariances in, say, computer vision, we can take "exponential" steps forward. If a machine has to figure out what a fish looks like without a concept of scale invariance (zooming), it will need lots of pictures of fish at various zoom settings/distances to learn. If you can tell the machine that the scale of an object in the image is irrelevant, it should learn the concepts from fewer examples, and generalise better to situations it has not seen before.

Modelling invariances and priors allows us to achieve progress in machine learning that surpasses the exponential growth in computing power and data.

I'm not proposing we should go back to engineering features of fish by hand - far from it. But we actually know quite a lot about the process of how images are captured, and the invariances this process implies in the image space: rotations, translations, scaling, etc. I can't see why one wouldn't model these invariances explicitly when learning from images taken following the same known physical process.
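One simple way to model a known invariance explicitly is to average a feature over the whole set of transformations you want to ignore. The sketch below is a toy illustration under my own assumptions: it only handles rotations by multiples of 90 degrees on a square image (arbitrary angles would need interpolation), the "feature" is just a random weighted sum of pixels, and the function names are invented for the example.

```python
import numpy as np

def raw_feature(image, weights):
    """An arbitrary, non-invariant feature: a weighted sum of pixels."""
    return float(np.sum(image * weights))

def rotation_invariant_feature(image, weights):
    """Average the raw feature over all four 90-degree rotations of the image."""
    return float(
        np.mean([raw_feature(np.rot90(image, turns), weights) for turns in range(4)])
    )

rng = np.random.default_rng(0)
image = rng.random((8, 8))          # stand-in for a photo (illustrative data)
weights = rng.normal(size=(8, 8))   # stand-in for learned parameters

rotated = np.rot90(image)  # the same "object", rotated by 90 degrees

# The raw feature changes when the image is rotated...
print(raw_feature(image, weights), raw_feature(rotated, weights))

# ...while the averaged feature does not (up to floating-point rounding).
print(
    rotation_invariant_feature(image, weights),
    rotation_invariant_feature(rotated, weights),
)
```

Rotating the input only reorders the four terms being averaged, so the result is the same whatever the weights are, and the model never needs to see rotated examples to learn this.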

Modelling invariances in deep learning is also technically quite difficult, particularly so in unsupervised learning. For example, max-pooling, the most widely used technique for achieving invariance, is incompatible with autoencoders, deep belief networks and Boltzmann machines, which are the main unsupervised deep learning techniques today. My prediction is that the next real breakthrough in visual object recognition is going to come from systems that somehow handle invariances better.