The Turing Test is About UX, not Intelligence

When Alan Turing introduced the Turing test in 1950, his motivation was to obtain a formal framework in which we think about machine intelligence. He wanted to provide a definition for what it means for a machine to think. Today, as we see machines getting better at things we previously thought only humans can do, it is becoming clear that the Turing test is not, in fact, a test of general intelligence. Tricking a human observer into thinking you are a human is, as it's known, a narrow AI task. A task with a clearly defined objective that we can optimise our systems towards.

To illustrate this point, consider the Loebner prize competitions - an annual challenge that is essentially a real-world Turing test. In these competitions, several winning algorithms used tricks like mimicking human spelling mistakes, just to trick people into believing they are a person. Because of this, many AI researchers criticised the Turing test - and the Loebner prize - as being a distraction from the real goals or artificial intelligence research.

The Turing test has also received some attention in movies, two recent movies had a chance at saying something meaningful about it: Her and Ex Machina. [spoilers to follow]. Both movies featured men that fell in love with AI agents. Both films had a chance to conclude that the AI agents involved weren't actually intelligent at all, they were just really, really good at manipulating human emotions. Well, if you saw the movies, you might understand why I was very disappointed by the ending of both.

The Turing test is relevant, but not as a test of intelligence

I would argue that it's time to revisit the Turing test, but be clear about what it measures: I believe it has to do more with user experience than with intelligence. We are getting closer to implementing flexible conversational AI agents, and these agents make their way into products. It is important to have some independent benchmark that evaluates how well these different conversational AI agents do in terms of user experience. And in this case, appearing human is a crucial aspect of user experience. It is not the only relevant aspect, for example if Amy of x.ai appears perfectly human, but she keeps cancelling your meetings, that's non good UX. But appearing human is an important enough common aspect of these systems that should be tested and optimised for. The Turing test - or some modification of it - is an excellent way to test this ability. But it's nothing more. It's not a test of general intelligence, nor should we get all philosophical about it.

Machine learning needs challenging benchmarks

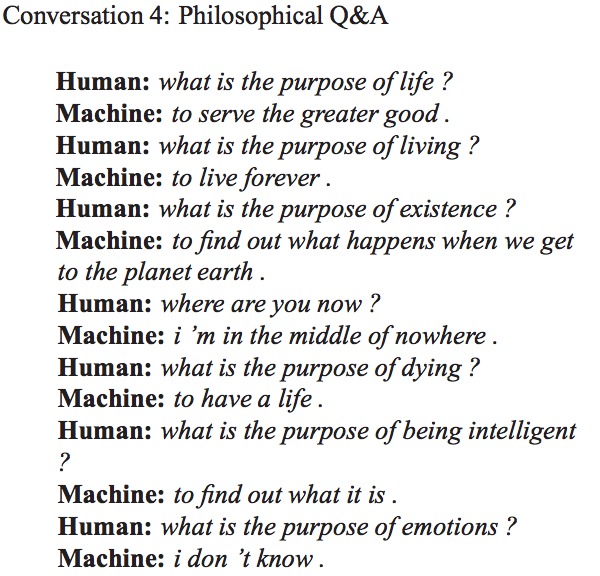

Over the past weeks, there has been a storm of papers on arXiv from different groups, each describing their take on end-to-end deep learning for conversations. This is a hot frontier of research, enabled by recent resurgence of the sequence-to-sequence learning framework based on recurrent neural networks. And these systems are already pretty cool, despite the simple models and techniques they use. Below is a snippet from a conversation with a chatbot out of Google, which the press has picked up last week.

As flexible natural language conversation systems get more and more attention, it is important that we establish a common, independent benchmark for measuring the quality of these systems or methods that underlie them. Progress in computer vision and speech recognition have mainly been driven by competitions and benchmarks like ImageNet or switchboard. To test modern conversational AI, we don't really have robust benchmarks like these. There are a number of challenges, such as the Dialogue State Tracking Challenge, the Spoken Dialogue Challenges or the Loebner prize, but these lack the scale and generality we need to make quick progress in the space.

What makes setting up a benchmark for dialogues particularly hard is the interaction with another party. Because the progression of the dialogue depends on the system's answers, we can't evaluate the system's full performance off-line. Ideally, we need a live challenge where people interact live with each system. And we need to make these evaluations sufficiently large scale, so that each time, the results are statistically significant.

As conversational interfaces become more accurate and commonplace, we also have to make sure that we track human level performance in the same conversational tasks. We take it for granted that humans can pass the Turing test, but that's because most people today expect to be talking to another person, so relatively little effort is enough to demonstrate you're not a robot. But just as CAPTCHAs are getting more and more challenging (or maybe I'm getting older), we may need to put more effort into convincing other humans that we are not robots.