Representation Learning and the Information Bottleneck Approach

I want to highlight and recommend an arXiv paper I've read a few months ago:

- DJ Strouse and David Schwab (2016): The deterministic information bottleneck

This paper is refreshingly well written, it was pure delight to read. Therefore, I won't summarise it here, it would be robbing you of the experience of reading a much better exposition. So please go and read the original, the rest of this post are just my thoughts connecting it to representation learning.

The state of unsupervised representation learning

Many people who are interested in unsupervised learning today primarily hope to use it to learn representations that are useful to solve certain tasks later. For example, classify images with fewer labels. But I think most researchers would also agree that we don't have all the answers about how to do this properly. How should we formulate the goal of representation learning in a way that can be used as an objective for learning?

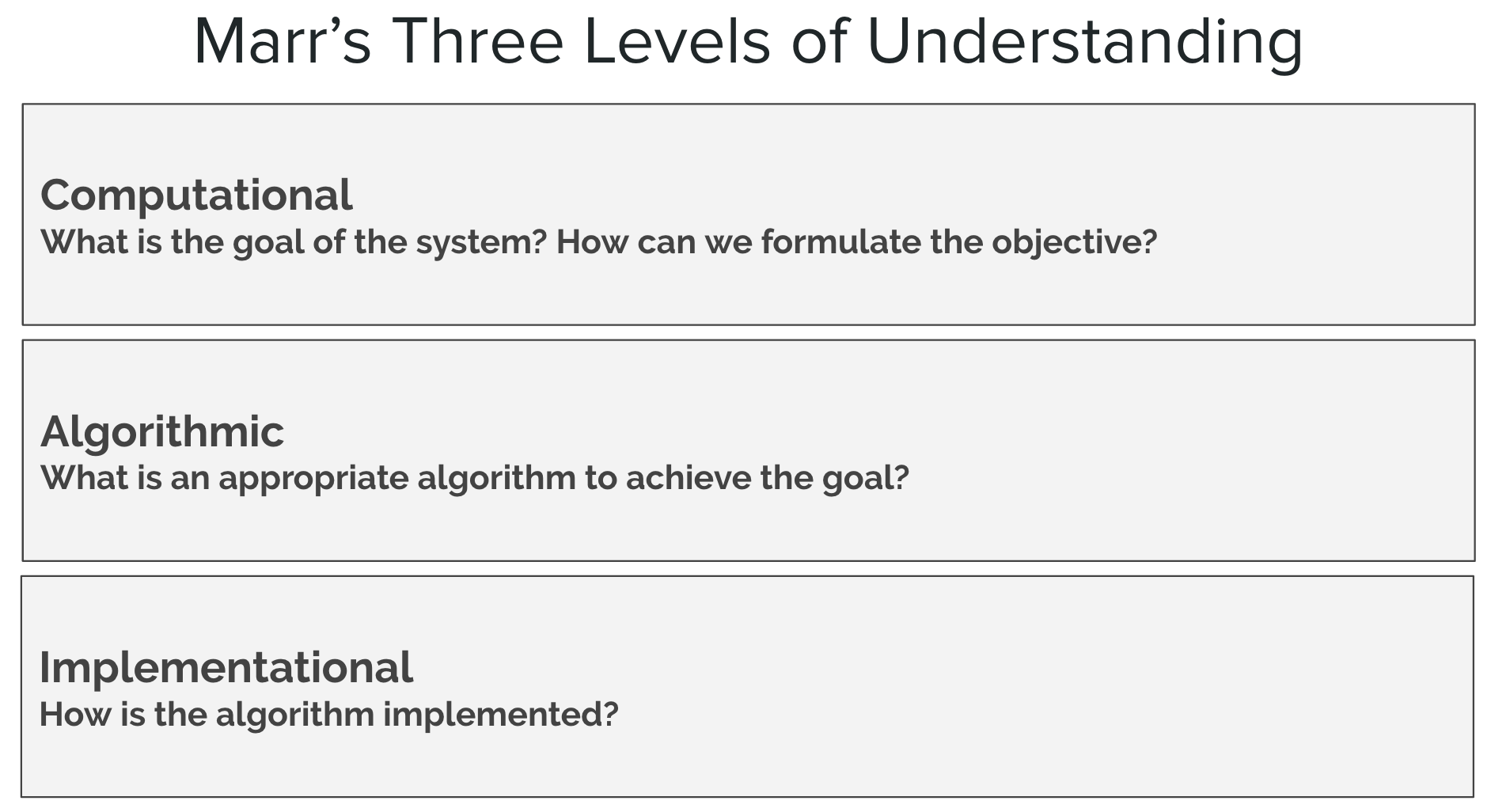

Let me put this in the context of Marr's three levels of analysis, shown in the figure below. Advances in deep learning gave us very powerful new tools for learning rich representations, especially for raw sensory signals like images. But this mainly represents progress at the algorithmic and implementation level. I believe that we still desperately need new insights at the computational level, especially when it comes to unsupervised learning.

Information Bottleneck

The information bottleneck approach is a beautifully elegant approach to representation learning: specifically for deciding which aspects of observed data are relevant information, and what aspects can be thrown away. It does this by balancing two criteria (formally expressed in Equation 8 of the paper):

- compactness of representation, measured as the compressibility: number of bits needed to store the representation, and

- information the representation retains about some behaviourally relevant variables.

I think this is what representation learning should be about at a high level. This framework encapsulates what should be the computational goal of representation learning.

sidenote: In fact, for a truly general (but arguaby less practical) framework, I personally prefer use a more general notion of information expressed terms of Bayes risks, see e.g. this paper or section 1, Eqn. 1.16 and subsection 1.3.7 of my thesis. But this is a story for another day.

The elephant in the room is, of course, that this pretty much assumes a supervised learning setting. It is assumes we know what the behaviourally relevant variables are and how they are related to observed data, or at least we have to have data to learn or approximate the joint distribution $p(x,y)$ between observed and relevant variables. The question remains how could one possibly do something like this in the unsupervised, or at least semi-supervised setting.

Representation learning: Likelihood? Autoencoders?

I'm now going to look at two popular unsupervised learning techniques and try to understand how they behave in the context of learning representations. Before I go any further, I just want to say that I'm aware that none of the stuff I write about here is new, or particularly insightful, but I think it's still important to talk about.

Maximum likelihood

Maximum (marginal) likelihood looks at the marginal distribution of observed data $p(x)$, and tries to build a model $q(x)$ that approximates this in the $\operatorname{KL}[p|q]$ sense. The representations we then use are often extracted from the conditional distributions of some hidden variables $y$ conditioned on observations $q(y|x)$. An important fact to notice is that the same marginal model $q(x)$ can be represented as the marginal of an infinite number of joint distributions $q(x,y) = q(y|x)q(x)$. You can represent a Gaussian $q(x)$ as just a Gaussian, without hidden variables, as the end-point of a Brownian motion, or even as the output of some weird nonlinear neural network with some noise fed in at the top. Therefore, unless we have some other assumptions, the likelihood alone can't possibly tell apart these different representations.

Autoencoders

This is not meant to be a crusade against maximum likelihood, the same criticism applies to other unsupervised criteria, for example, autoencoders or denoising autoencoders (DAE). DAE learns about the data distribution $p(x)$, and it will build representations that are useful to solve the denoising task. It is related to the information bottleneck in that it solves the same trade-off between compression and retaining information. However, instead of retaining information about behaviourally relevant variables, it tries to retain information about the data $x$ itself. This is really the key limitation, as it cannot (without further assumptions) tell which aspects of the data are behaviourally relevant and which aren't. It has to assume that everything is equally relevant.

To summarise, a key limitation of using unsupervised criteria for representation learning is the following:

Maximum likelihood (or autoencoding), without strong priors, is the same as assuming that every bit, each pixel, of your raw observed data is equally relevant behaviourally.

The need for either priors or labelled data

So my view is that unsupervised representation learning is really hopeless without either:

- meaningful, strong priors or assumptions about the behaviourally relevant variables, or the decision problems we want to use our representations for, or

- at least some labelled data to inform the training procedure about behaviourally relevant aspects, i.e. a semi-supervised setting.

Slow feature analysis (SFA) is a really neat example of how priors help: SFA learns useful representations by assuming that behaviourally relevant variables change slowly over time. Another often used assumptions are sparsity and independence, the history of the former was summarised in Shakir's latest blog post.

At the same time, Ladder Networks use a smart architecture and objective (a form of a prior) that allows them to solve the semi-supervised learning problem very data-efficiently (as in, using only a few labelled but lots of unlabelled examples). Through this lens, reinforcement learning can also be seen as a way of incorporating information about what is behaviourally relevant and what is not.

Final words

In summary, to learn behaviorally useful representations in a fully unsupervised way, we need priors and assumptions about the representation itself. Sometimes these priors are encoded in the architecture of neural networks, sometimes they can be incorporated more explicitly as in SFA, ICA or sparse coding.

If you ask me, the brain probably has a mixed strategy: it probably has strong priors/assumptions encoded in its architecture or learning algorithm. In fact, the slowness principle behind SFA has been pretty successful as a normative model for explaining representations found in the brain: grid cells, place cells, head-direction cells, simple cells, complex cells, etc. Here is an overview. But I would be surprised if the learned representations in a fully unsupervised way. It probably employs data-efficient forms of reinforcement learning and semi-supervised learning to inform the representations built by lower-level perception about what is behaviourally relevant and what is not.

Representation learning $\neq$ generative modelling

One key takeaway, as always, is that the way you train your models should represent the way you want to use them later. We are often not clear about these goals, and the current terminology pretty much washes together everything on representation learning, generative models and unsupervised learning. Most ML papers today focus on algorithmic, or implementation level insights and ideas. When you read these papers, particularly in unsupervised learning, you can always try and retrofit a computational level understanding, and figure out how you could use it to solve one problem or another.